PIPELINING

A pipeline is a set of data processing elements

connected in series, so that the output of one

element is the input of the next one.

Pipeline Logic

---> A clock drives all the registers in the pipeline. This clock causes the

- CLC output to be latched in the register which provides input to the next stage, and thus making a start of new computation possible for next stage.

---> The maximum clock rate is decided by the time delay of the CLC in the stage and the delay of the staging latch.

PIPELINING ANALOGY

Pipelined laundry: overlapping execution

◦Parallelism improves performance

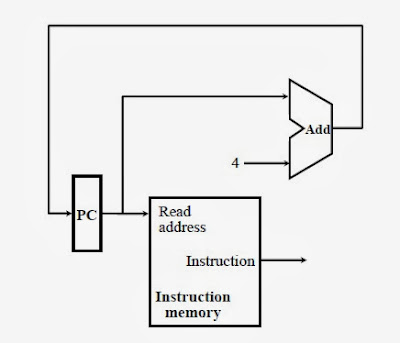

FIGURE 1

MIPS PIPELINE : Steps in Executing an Instruction

• Instruction Fetch (IF)

– Fetch the next instruction from memory

• Instruction Decode (ID)

– Examine instruction to determine:

– What operation is performed by the instruction (e.g., addition)

– What operands are required, and where the result goes

• Operand Fetch

– Fetch the operands

• Execution (EX)

– Perform the operation on the operands

• Result Writeback (WB)

– Write the result to the specified location

PIPELINE PERFORMANCE

---> Assume time for stages is

- 100ps for register read or write

- 200ps for other stages

---> Compare pipelined datapath with single-cycle datapath

FIGURE 2

PIPELINE PERFORMANCE (CONT...)

FIGURE 3

PIPELINE SPEEDUP 1

--->If all stages are balanced

◦ all take the same time

◦Time between instructions(pipelined)

= Time between instructions(nonpipelined)/ Number of stages

--->If not balanced, speedup is less

Speedup due to increased throughput

◦Latency (time for each instruction) does not decrease

Ideally 5 stage pipeline should offer nearly fivefold improvement over the 800 ps nonpipelined time.

Refer to figure 3, the example does not reflect fourfold improvement for three instructions

◦2400/1400 ≈ 1.7

Add 1,000,000 instructions, each add 200 ps to the total execution time,

◦Total execution time = 1,000,000 x 200 ps + 1400 ps

= 200,001,400 ps

◦Nonpipelined total execution time

= 1,000,000 x 800ps + 2400ps

= 800,002,400 ps

◦Speedup = 800,002,400/200,001,400

≈ 4

PIPELINE SPEEDUP 2

FIGURE 4

Critical Path for Different Instructions

HAZARD

---> Situations that prevent starting the next instruction in the next cycle

---> 3 type of hazard are:

Structure hazards

◦A required resource is busy

Data hazard

◦Need to wait for previous instruction to complete its data read/write

Control hazard

◦Deciding on control action depends on previous instruction

STRUCTURE HAZARD

--->Conflict for use of a resource

--->In MIPS pipeline with a single memory

◦Load/store (pipelined) requires data access at the same time

◦Instruction fetch would have to stall for that cycle

- Would cause a pipeline “bubble”

--->Solution, pipelined datapaths require separate instruction/data memories

◦Or separate instruction/data caches

DATA HAZARD

An instruction depends on completion of data access by a previous instruction

- add $s0, $t0, $t1

- sub $t2, $s0, $t3

FIGURE 5

SOLUTION : FORWARDING (aka Bypassing)

--->Use result when it is computed

◦Don’t wait for it to be stored in a register

◦Requires extra connections in the datapath

FIGURE 6

LOAD-USE DATA HAZARD

FIGURE 7

CODE SCHEDULING TO AVOID STALLS

Reorder code to avoid use of load result in the next instruction

C code for A = B + E ; C = B + F ;

FIGURE 8

CONTROL HAZARD

--->Branch determines flow of control

- Fetching next instruction depends on branch outcome

- Pipeline can’t always fetch correct instruction

Still working on ID stage of branch

--->In MIPS pipeline

- Need to compare registers and compute target early in the pipeline

- Assume, add hardware to do it in ID stage, test registers, calculate branch address & update PC during 2nd stage of pipeline.

SOLUTION 1 : STALL ON BRANCH

---> Wait until branch outcome determined before fetching next instruction

FIGURE 9

Figure 9 shows a pipeline showing stalling on every conditional branch as solution to control hazards.

This example assumes the conditional branch is taken, and the instruction at the destination of the branch is the OR instruction.

SOLUTION 2 : BRANCH ON PREDICTION

---> Longer pipelines can’t readily determine branch outcome early

- Stall penalty becomes unacceptable

--->Predict outcome of branch

- Only stall if prediction is wrong

--->In MIPS pipeline

- Can predict branches not taken

- When you are right, fetch instruction after branch, with no delay

MORE - REALISTIC BRANCH PREDICTION

--->Static branch prediction

- Based on typical branch behavior

- Example: loop and if-statement branches

Predict backward branches taken

Predict forward branches not taken

--->Dynamic branch prediction

- Hardware measures actual branch behavior

e.g., record recent history of each branch (as taken or untaken branch)

- Assume future behavior (predict from past behavior) will continue the trend

When wrong, stall while re-fetching, and update history

BY AINI KHAIRANI BT AZMI